One-Sided vs Two-Sided Test - A simple guide

Learn the difference between one- and two-sided tests, when to use each type, and how to avoid costly mistakes so you can run better A/B tests with confidence.


Your new homepage design is crushing it. Early data shows a 15% lift in signups. Time to pop the champagne? 🍾

Not so fast.

The type of statistical test you use – one-sided or two-sided – could be the difference between celebrating a real win and shipping a silent killer.

Let me explain this in plain English (promise, no PhD required).

- One-sided test = "Is B better than A?" (only checking one direction) ➡️

- Two-sided test = "Is B different from A?" (checking both better AND worse) ↔️

- Rule of thumb: If you're not 100% sure the change can't backfire (e.g., a new hero section that might lower your conversion rate), use two-sided 🛡️
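For readers who want the formal version, here is a sketch of the two hypothesis pairs, writing p_A and p_B for the true conversion rates of versions A and B (notation introduced here for illustration):

```latex
\begin{aligned}
\text{One-sided:}\quad & H_0: p_B \le p_A \quad\text{vs.}\quad H_1: p_B > p_A \\
\text{Two-sided:}\quad & H_0: p_B = p_A \quad\text{vs.}\quad H_1: p_B \ne p_A
\end{aligned}
```

The one-sided null lumps "no change" and "worse" together, which is why a drop can never come out as a significant result in that setup.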

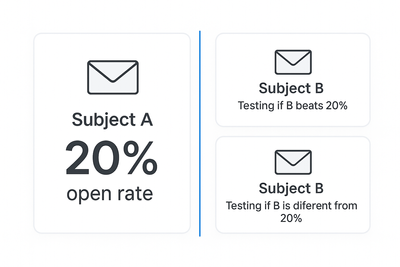

The Email Subject Line Example 📧

Here's the clearest example I can give you:

- Your current email subject line: Gets 20% open rate

- Your new subject line: Uses emojis and urgency

A one-sided test hypothesis would be: "Is the new subject line BETTER than 20%?"

- ✅ You're only checking if it beats 20%

- ❌ If it gets 15%, you won't flag the drop as significant

- 🎲 You're betting it can only improve

A two-sided test hypothesis would be: "Is the new subject line DIFFERENT from 20%?"

- ✅ You're checking if it's better OR worse than 20%

- ✅ If it gets 15% OR 25%, either change can show up as significant

- 🛡️ You're open to any change

The key difference? One-sided ignores bad outcomes. Two-sided catches changes in either direction.
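To see the difference in numbers, here is a minimal sketch of a one-sample z-test against the 20% baseline. It assumes each subject line was sent to 1,000 people (a hypothetical figure) and relies on the normal approximation; the function name is illustrative, not from any library.

```python
from statistics import NormalDist


def one_sample_proportion_pvalues(opens: int, sent: int, baseline: float = 0.20):
    """Return (one_sided_greater, two_sided) p-values for H0: open rate == baseline.

    Hypothetical helper using the normal approximation to the binomial.
    """
    observed = opens / sent
    # Standard error of the open rate under the null hypothesis.
    se = (baseline * (1 - baseline) / sent) ** 0.5
    z = (observed - baseline) / se
    std_normal = NormalDist()
    # One-sided: only evidence that the rate is ABOVE the baseline counts.
    p_one_sided = 1 - std_normal.cdf(z)
    # Two-sided: evidence in either direction counts.
    p_two_sided = 2 * (1 - std_normal.cdf(abs(z)))
    return p_one_sided, p_two_sided


for opens in (150, 250):  # 15% and 25% open rates on an assumed 1,000 sends
    one, two = one_sample_proportion_pvalues(opens, sent=1000)
    print(f"{opens / 1000:.0%} open rate: one-sided p = {one:.4f}, two-sided p = {two:.4f}")
```

With these assumed numbers, the 15% result gives a one-sided p-value close to 1 (nothing to report), while its two-sided p-value is far below 0.05. The 25% result is significant either way.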

Real Example: Netflix's Skip Intro Button 🎬

When Netflix tested their "Skip Intro" button, they could have used:

- One-sided test: "Will adding Skip Intro increase watch time?" (assuming it can only help)

- Two-sided test: "Will Skip Intro change watch time?" (open to any effect)

Good thing they likely used two-sided! Some shows have iconic intros (think Game of Thrones) where skipping might actually reduce engagement.

Quick Comparison 📊

| Aspect | One-Sided Test | Two-Sided Test |
|---|---|---|
| Question | Is B better than A? | Is B different from A? |
| Detects | Changes in ONE direction only ⬆️ | Changes in BOTH directions ↕️ |
| Risk | ⚠️ Might miss harmful effects | ✅ More conservative |
| Best For | Obvious improvements | Most A/B tests |

A Real Example That Shows Why This Matters 🚨

Imagine you tested a new "streamlined" checkout process:

Results after 10,000 visitors:

- 🟢 Original checkout: 2.0% conversion rate

- 🆕 New checkout: 2.3% conversion rate

- 📊 p-value (two-sided): 0.08

- 📊 p-value (one-sided): 0.04

- With a one-sided test: "New checkout wins! p = 0.04 < 0.05" ✅

- With a two-sided test: "Not significant. p = 0.08 > 0.05" ❌

Here's the catch: You were so confident it would improve conversions that you only looked for improvements. But what if the new checkout had actually performed worse, say during Black Friday, when your regular checkout does better? A one-sided test can never flag that drop as significant. A two-sided test can, which is what protects you from shipping hidden harm.

The danger? One-sided tests can find "significance" more easily, but they're blind to negative effects. Choose wisely.
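Here is a minimal sketch of the calculation behind numbers like these: a pooled two-proportion z-test that reports both p-values. The article doesn't say how the visitors were split, so the counts below are an assumption: 14,000 visitors per arm. With only 10,000 visitors, a 2.0% vs 2.3% gap would produce a two-sided p-value well above 0.08. The function name is hypothetical.

```python
from statistics import NormalDist


def two_proportion_pvalues(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Pooled two-proportion z-test. Returns (z, one_sided_p, two_sided_p).

    The one-sided p-value tests H1: rate_B > rate_A (B is better).
    """
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = (pooled * (1 - pooled) * (1 / n_a + 1 / n_b)) ** 0.5
    z = (rate_b - rate_a) / se
    cdf = NormalDist().cdf
    one_sided = 1 - cdf(z)               # only the "B is better" tail
    two_sided = 2 * (1 - cdf(abs(z)))    # both tails
    return z, one_sided, two_sided


# Hypothetical counts: 14,000 visitors per arm, 2.0% vs 2.3% conversion.
z, p_one, p_two = two_proportion_pvalues(280, 14_000, 322, 14_000)
alpha = 0.05
print(f"z = {z:.2f}")
print(f"one-sided p = {p_one:.3f} -> {'significant' if p_one < alpha else 'not significant'}")
print(f"two-sided p = {p_two:.3f} -> {'significant' if p_two < alpha else 'not significant'}")
```

The z-statistic is identical in both cases. Only the cutoff changes, which is why the one-sided p-value is exactly half the two-sided one when the result goes in the predicted direction.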

What Are These Tests, Really? 🤔

One-Sided Test (One-Tailed Test) 🎯

Think of it as a metal detector that only beeps for gold, ignoring everything else.

The mindset: "I only care if B beats A. If B loses, I don't need to know."

Real A/B testing use case: You're testing whether reducing form fields improves completion. You're certain it won't make things worse (fewer fields = less friction, right?).

Famous Example: Amazon's "1-Click Ordering" 🛒

When Amazon tested removing ALL friction from checkout, they used a one-sided test. Why? Because making purchasing easier couldn't possibly reduce sales. They were right - it increased purchases by 5%.

Two-Sided Test (Two-Tailed Test) 👀

This is like having a security system that alerts you to ANY unusual activity – whether someone's breaking in OR breaking out.

The mindset: "I need to know if B is different from A, period. Better or worse both matter."

Real A/B testing use case: Complete checkout redesign. It could boost conversions OR confuse the hell out of users.

Famous Example: Walmart's $1.85 Million Mistake 💸

Walmart redesigned their entire website in 2009, confident it would improve sales. They should have used a two-sided test! The "cleaner" design actually decreased conversions by 27%. They quickly reverted after losing millions.

The Trade-Off Nobody Talks About ⚡

Here's a little secret: one-sided tests are "easier" to win.

With the same confidence level (95%) and the same statistical power (e.g., 80%):

- One-sided test: Needs ~20% less data to find significance 📉

- Two-sided test: Needs more data but catches effects in both directions 📈

It's tempting to go one-sided for faster results. But remember: with great power comes great responsibility not to mess up your metrics.
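Where does "~20% less data" come from? Here is a rough sketch using the standard normal-approximation sample-size formula for comparing two proportions. The 2.0% to 2.3% lift is borrowed from the checkout example as an assumed target, and the helper is hypothetical rather than part of a specific tool.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_arm(p_base: float, p_target: float, alpha: float = 0.05,
                        power: float = 0.80, two_sided: bool = True) -> int:
    """Approximate visitors needed per arm for a two-proportion z-test."""
    inv = NormalDist().inv_cdf
    # Two-sided tests split alpha across both tails; one-sided puts it all in one.
    z_alpha = inv(1 - alpha / 2) if two_sided else inv(1 - alpha)
    z_beta = inv(power)
    variance = p_base * (1 - p_base) + p_target * (1 - p_target)
    n = (z_alpha + z_beta) ** 2 * variance / (p_target - p_base) ** 2
    return ceil(n)


n_two_sided = sample_size_per_arm(0.020, 0.023, two_sided=True)
n_one_sided = sample_size_per_arm(0.020, 0.023, two_sided=False)
print(f"two-sided: {n_two_sided:,} visitors per arm")
print(f"one-sided: {n_one_sided:,} visitors per arm "
      f"({1 - n_one_sided / n_two_sided:.0%} less data)")
```

At 95% confidence and 80% power, the one-sided test needs roughly 21% fewer visitors per arm. That ratio depends only on alpha and power, not on the conversion rates.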

When to Use Each

| Use One-Sided When | Use Two-Sided When |
|---|---|
| Direction is obvious ➡️ • Free shipping badges • Faster page load • Lower prices • Amazon Prime badge | Direction is unclear ❓ • Button colors (red vs green) • Long vs short copy • Different layouts • Airbnb's host photos |
| Low risk + low traffic 🐢 • Minor copy tweaks • Small UI improvements • Adding trust signals • "As seen on TV" badges | High risk or high traffic 🚀 • Checkout flow changes • Pricing page redesigns • Core feature changes • Facebook's news feed |
| Prior evidence exists 📚 • Proven patterns (social proof) • Industry best practices • Previous test learnings • Adding reviews | Testing something new 🆕 • Novel approaches • Experimental features • Untested hypotheses • Snapchat Stories (at launch) |

The Math 🧮

I promised no PhD stuff, but here's the simple version:

| Test Type | How It Works | What It Means |
|---|---|---|
| Two-sided (95% confidence) | • 2.5% error chance each direction • Total 5% error rate • p < 0.05 to win | Harder to reach significance but catches changes in both directions ✅ |
| One-sided (95% confidence) | • 5% error chance in one direction • 0% in other direction • p < 0.05 to win | Easier to reach significance but blind to opposite effects ⚠️ |

Translation: Same data can be "not significant" two-sided but "significant" one-sided.
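The cutoffs behind this table can be checked with Python's standard library. This sketch computes the critical z-values for both test types and applies them to two hypothetical test statistics: a modest lift and a clear drop.

```python
from statistics import NormalDist

std_normal = NormalDist()
alpha = 0.05

# Two-sided: alpha is split, 2.5% in each tail.
z_crit_two = std_normal.inv_cdf(1 - alpha / 2)
# One-sided: the whole 5% sits in the predicted ("B is better") tail.
z_crit_one = std_normal.inv_cdf(1 - alpha)
print(f"two-sided cutoff: |z| > {z_crit_two:.3f}")
print(f"one-sided cutoff:  z  > {z_crit_one:.3f}")

for z_observed in (1.8, -3.0):  # hypothetical test statistics (B minus A)
    one_sided = z_observed > z_crit_one
    two_sided = abs(z_observed) > z_crit_two
    print(f"z = {z_observed:+.1f}: one-sided significant={one_sided}, "
          f"two-sided significant={two_sided}")
```

The z = +1.8 case is the "significant one-sided, not two-sided" situation. The z = -3.0 case is the blind spot: a large drop that a one-sided test for improvement never flags.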

Learn from real-life examples

One-Sided examples

Marks & Spencer's £150 Million Website 📉

In 2014, M&S launched a "mobile-first" redesign, confident it would boost sales. In effect, they went in with a one-sided mindset. Result? The confusing navigation caused an 8% drop in online sales. Cost: millions in lost revenue.

JCPenney's "No Sales" Strategy 🏷️

CEO Ron Johnson was SO confident that "everyday low prices" would beat "fake sales" that they didn't properly test both directions. Revenue dropped 25% in one year. Sometimes customers want to feel like they're getting a deal!

Two-Sided examples

Google's 41 Shades of Blue 🔵

Google famously tested 41 shades of blue for links using two-sided tests. The winning shade generated $200M+ in additional revenue. A one-sided test might have missed this if they'd only looked for "improvements" from their designer's favorite.

Amazon's Product Recommendations 📦

When Amazon tested "Customers who bought this also bought...", they used two-sided testing. Good thing - early versions actually DECREASED sales on certain categories (overwhelmed customers). They iterated until it worked.

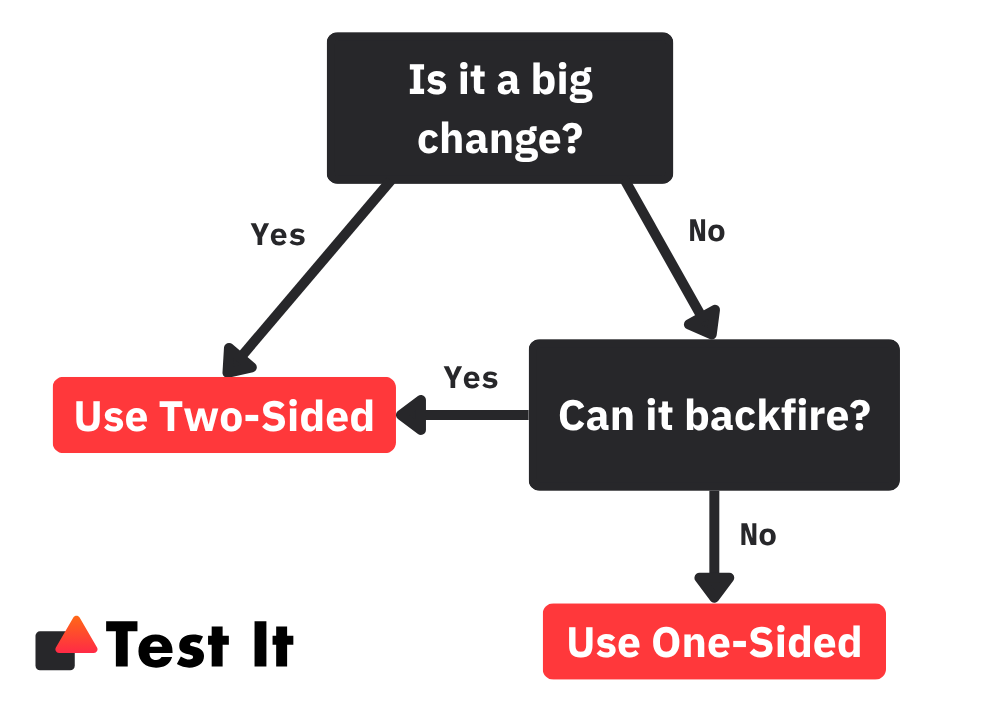

Your Decision Framework

Ask yourself:

- Could this change possibly backfire? → If yes, TWO-SIDED ↔️

- Would I be shocked if it performed worse? → If no, TWO-SIDED ↔️

- Am I picking one-sided just for speed? → If yes, TWO-SIDED ↔️

- Have I already peeked at results? → If yes, TWO-SIDED ↔️

Still unsure? Default to two-sided; it's the safest option.
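If you want to bake this checklist into an experiment-planning script, a minimal sketch could look like the following. The function and parameter names are hypothetical; it simply returns "two-sided" whenever any of the four questions raises a red flag.

```python
def choose_test(could_backfire: bool, shocked_if_worse: bool,
                picking_for_speed: bool, already_peeked: bool) -> str:
    """Hypothetical helper encoding the four checklist questions.

    Any single red flag means: use a two-sided test.
    """
    red_flags = [
        could_backfire,        # the change could plausibly hurt the metric
        not shocked_if_worse,  # a drop would not surprise you
        picking_for_speed,     # one-sided is only tempting because it's faster
        already_peeked,        # direction chosen after seeing the data
    ]
    return "two-sided" if any(red_flags) else "one-sided"


# Only a plan with no red flags at all qualifies for one-sided.
print(choose_test(could_backfire=False, shocked_if_worse=True,
                  picking_for_speed=False, already_peeked=False))
print(choose_test(could_backfire=True, shocked_if_worse=True,
                  picking_for_speed=False, already_peeked=False))
```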

The Bottom Line 🎯

Use two-sided tests by default. They're like insurance – slightly annoying but essential when things go wrong.

Only go one-sided when:

- ✅ You have rock-solid reasoning

- ✅ The opposite effect is truly impossible

- ✅ You've documented why BEFORE launching

Remember: The goal isn't to rack up wins. It's to make genuinely better decisions that improve results.

Because shipping a "winner" that silently hurts your business? That's the ultimate loss 😉

Ready to Run Better Tests?

Put this knowledge into practice! Test It is the easiest and most affordable way to run A/B tests. Get started for free.
